How to preserve, cite, and design websites for the long-term future (part two)

- Deborah Thorpe

- June 8, 2023

By Deborah Thorpe, Research Data Steward, University College Cork Library

THE ‘DIY’ SOLUTION TO CAPTURING AND RECREATING WEBSITES

I can’t collect and preserve everything. I think that’s a romantic notion and nobody can achieve that… What I can achieve is a curated, representative collection… Taking a snapshot of a moment in time, identifying content that tells a story [or] answers a question. (J. J. Harbster, 2022)

Capturing and preserving websites yourself

It is possible to record and replay web archives yourself, in a way that will enable yourself or future users to replay dynamic websites.

To archive your project website yourself, you might choose to use a web crawler such as Heritrix or Conifer (previously known as Webrecorder.io) to visit your web addresses and snapshot the information as WARC or WACZ files. Another useful tool is ArchiveWeb.page, a Chrome extension and desktop app developed by Webrecorder. The tool allows you to surf through web pages capturing as you browse, enabling high quality archives.

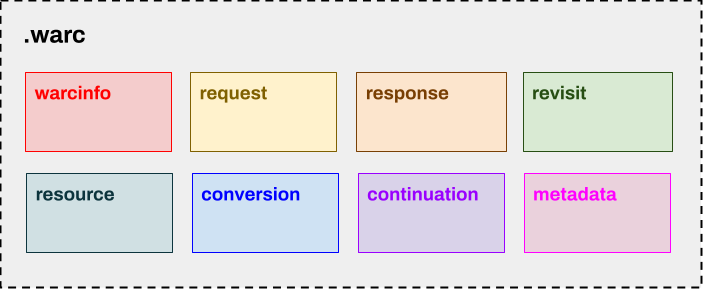

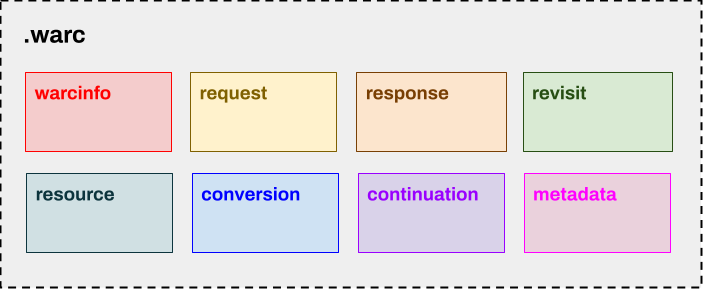

The WARC (Web ARChive) format specifies a method for combining multiple digital resources into an aggregate archival file together with related information. WACZ is a Zip file-style media type that allows web archive collections to be packaged and shared on the web as a discrete file and is relatively new and backed by the Webrecorder/Conifer project.

The WARC file includes ‘metadata about its creation and contents, records of server requests and responses, and each server response’s full payload’. So, ‘everything that was done in order to record the transfer of information from a web server to its reader’. When the files are replayed with a WARC viewer, they appear how they were when they were captured.

But once you have collected web pages as WARC/WACZ files, how do you preserve these files to ensure that they, themselves, are available in the future?

The WARC/WACZ files can be deposited in a data repository, such as Zenodo. Attention should be paid to the descriptive metadata that is created to accompany these website files.

With regards to third-party websites, bear in mind that collecting content and putting it in a repository like Zenodo is a breach of copyright, unless you have permission from the owner. This is not an issue for single page captures with, for example, Perma.cc or for submitting content to the Wayback Machine (in the latter case Archive.org themselves deal with any claims of copyright infringement) – but it is relevant if you are doing captures of other people’s websites with, for example, Heritrix or Conifer with the intention of digitally preserving them.

Anyone who wants to recreate the content that has been captured will need to use software to play the files back. An example would be OpenWayback, used to ‘play back’ archived websites in the user’s browser. Or you would use Webrecorder player to do it offline, or Replayweb.page to view web archives from anywhere, including local computer or even Google Drive.

It is useful to include a link and/or instructions on how to use these types of software in the metadata for your repository object. Doing this is the ‘Interoperable’ and ‘Reusable’ in the FAIR acronym; the ‘data’ is no good if it is not in a standard format (i.e. WARCs) or if you do not create instructions on how they can use it and/or what tools they need to view it.

Archiving WordPress websites

WordPress powers 43% of all websites on the internet.

Due to its robust features, many of the top brands use WordPress to power their websites including Time magazine, Facebook, The New Yorker, Sony, Disney, Target, The New York Times, and more.

WordPress’ popularity as a website builder belies the idea that it is a blogging platform. Its WYSIWYG (What you see is what you get) content creation model and plugin-based development features make it a popular website creator and content management system (CMS) for many users who might otherwise lack the technical or financial means to create websites. WordPress is built using PHP and so the webpages are generated from a server and not downloadable as ‘flat’ files that can be easily preserved and recreated in a browser. The risk with PHP is when it is not updated, and so websites can be lost if the PHP version is not managed.

However, WordPress has a number of features to output the content to a folder containing the informational pages with a .html filetype as well as the relevant ancillary images, media files, CSS, Javascript. These can be archived more easily and can be opened in any browser. It has a natively built in XML exporter which allows all the content and media to be exported and then uploaded into another WordPress environment. This is useful if the site needs to be migrated. There are also numerous plugins available which will convert the site to flat HTML. Using one of these tools allows the user to download the whole site (media included) as flat HTML files which are then very easy to add to a digital repository.

The Internet Archive also gets a feed of new posts made to WordPress.com blogs and self-hosted WordPress sites, crawling the posts themselves, as well as all of their outlinks and embedded content – about 3,000,000 URLs per day.

‘Archive Team’

‘Archive Team’ sits somewhere between a ‘service’ and the more ‘DIY’ approaches that have been outlined above. It is described as ‘a loose collective of rogue archivists, programmers, writers and loudmouths dedicated to saving our digital heritage’. This group of over 100 volunteers actively archives content by uploading WARCs to archive.org on the archiveteam collection. The material that is archived is available in the Wayback Machine, and this is generally the recommended way of accessing it. Material crawled by the Archive Team is labelled in the Wayback Machine as having been captured by this group.

The method of requesting an archive of a smaller website (defined as up to a few hundred thousand URLs) is through their Crowdsourced Crawler ArchiveBot. You can join their IRC chat, provide the bot with a URL to start with and it grabs all content under that URL, records it in a WARC file and uploads it to the ArchiveTeam servers for eventual injection into the Wayback Machine. Archive Team are happy to archive sites that are known to be going away soon (on ‘Deathwatch’) so this would be a useful option if you know that your website is at risk and want to capture it quickly. Archive Team can always be reached by email at archiveteam@archiveteam.org.

Two useful tools that require more technical know-how

Web-archive-replay

Web-archive-replay provides access to a web archive file (either WARC or WACZ format) in a completely self-contained static web project. This means that you can publish a curated web archive, alongside contextual information, as a standalone digital exhibit website. An example has been created here. If you click on ‘Demo Archive’, you arrive at a demo interface where you can select a page and you will be taken to an embedded page that displays a local web archive WACZ file. This page displays, in the top right corner the time and date when it was created.

It is possible to host your WACZ file in Zenodo for long-term preservation with some instructions for the integration being on this setup guide. There are still a major challenges to be overcome with this integration, as the Zenodo file API seems to have a rate limit which is triggered by the ReplayWeb.page embedding that is used by web-archive-replay. Perhaps this is something that Zenodo may review for better integration in the future. Following Digital Librarian Evan Will on Mastodon is likely to be a good way to hear about any updates.

ReproZip-Web

ReproZip-Web is a prototype tool designed to quickly pack and unpack dynamic websites. The tool combines ReproZip and Rhizome’s Webrecorder project to a) trace the back end of the web server and b) record the front end files that come from external locations as WARC files. The two sets of files are then consolidated into one ReproZip package containing everything that is needed to reproduce the resource in a different environment. The server-side element of this web archiving process does require complex access and permissions, as well as technical understanding, and so we would advise that researchers have support from IT Services if they choose to go down this route.

Anyone who wishes to view the captured web resource uses ReproZip Unpacker to replay the ReproZip packgage, allowing them, on any type of computer, to access and replay the original web artifacts in their original computational environment. Thus, this is, is an emulation-based web tool and it seems to be the first of its kind.

The ReproZip package could then be preserved in a repository such as Zenodo, ready to be served up by the user as an accurate record of a dynamic website at a particular point in time.

The UCC Research Data Service is following with great interest the progress of this work-in-progress app, and more information can be found on the project GitHub page and the project’s grant proposal, which is available to read online. Though the ReproZip-Web workflow requires a more advanced level of understanding and admin permissions, it also seems that the technological developments will feed back into the Webrecorder project, thereby improving services such as Archiveweb.page.

A sensible approach to take is to combine submission of URLs to the Wayback Machine to ensure coverage of your webpages there with self-archiving using tools such as WebRecorder. This dual approach has been taken, for example, by Saving Ukrainian Cultural Heritage Online, a project that has worked to rapidly capture over 1,500 Ukrainian museum and library websites, digital exhibits, text corpora, and open access publications.